Designing Social Inquiry presents a unified approach to qualitative and quantitative research in political science, showing how the same logic of inference underlies both. This stimulating book discusses issues related to framing research questions, measuring the accuracy of data and the uncertainty of empirical inferences, discovering causal effects, and getting the most out of qualitative research. It addresses topics such as interpretation and inference, comparative case studies, constructing causal theories, dependent and explanatory variables, the limits of random selection, selection bias, and errors in measurement. The book uses mathematical notation only to clarify concepts and assumes no prior knowledge of mathematics or statistics.

Featuring a new preface by Robert O. Keohane and Gary King, this edition makes an influential work available to new generations of qualitative researchers in the social sciences.

Gary King is the Albert J. Weatherhead III University Professor at Harvard University. His books include A Solution to the Ecological Inference Problem (Princeton). Robert O. Keohane is professor emeritus of international affairs at Princeton University. His books include After Hegemony (Princeton). Sidney Verba (1932–2019) was the Carl H. Pforzheimer University Professor Emeritus and research professor of government at Harvard. His books include Unequal and Unrepresented (Princeton). King, Keohane, and Verba have each been elected as members of the National Academy of Sciences.

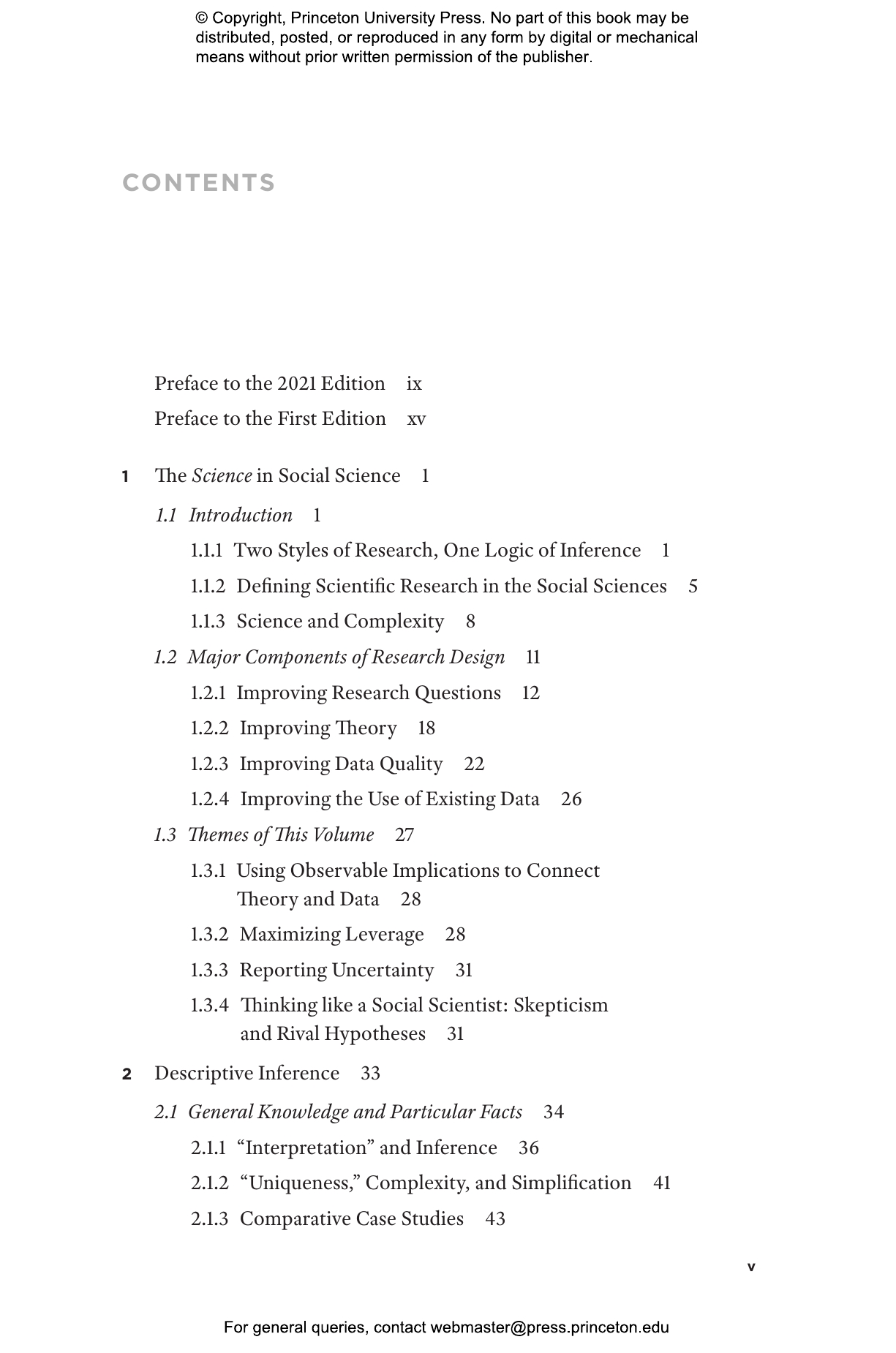

- Preface to the 2021 Edition

- Preface to the First Edition

- 1 The Science in Social Science

- 1.1 Introduction

- 1.1.1 Two Styles of Research, One Logic of Inference

- 1.1.2 Defining Scientific Research in the Social Sciences

- 1.1.3 Science and Complexity

- 1.2 Major Components of Research Design

- 1.2.1 Improving Research Questions

- 1.2.2 Improving Theory

- 1.2.3 Improving Data Quality

- 1.2.4 Improving the Use of Existing Data

- 1.3 Themes of This Volume

- 1.3.1 Using Observable Implications to Connect Theory and Data

- 1.3.2 Maximizing Leverage

- 1.3.3 Reporting Uncertainty

- 1.3.4 Thinking like a Social Scientist: Skepticism and Rival Hypotheses

- 2 Descriptive Inference

- 2.1 General Knowledge and Particular Facts

- 2.1.1 “Interpretation” and Inference

- 2.1.2 “Uniqueness,” Complexity, and Simplification

- 2.1.3 Comparative Case Studies

- 2.2 Inference: The Scientific Purpose of Data Collection

- 2.3 Formal Models of Qualitative Research

- 2.4 A Formal Model of Data Collection

- 2.5 Summarizing Historical Detail

- 2.6 Descriptive Inference

- 2.7 Criteria for Judging Descriptive Inferences

- 2.7.1 Unbiased Inferences

- 2.7.2 Efficiency

- 3 Causality and Causal Inference

- 3.1 Defining Causality

- 3.1.1 The Definition and a Quantitative Example

- 3.1.2 A Qualitative Example

- 3.2 Clarifying Alternative Definitions of Causality

- 3.2.1 “Causal Mechanisms”

- 3.2.2 “Multiple Causality”

- 3.2.3 “Symmetric” and “Asymmetric” Causality

- 3.3 Assumptions Required for Estimating Causal Effects

- 3.3.1 Unit Homogeneity

- 3.3.2 Conditional Independence

- 3.4 Criteria for Judging Causal Inferences

- 3.5 Rules for Constructing Causal Theories

- 3.5.1 Rule 1: Construct Falsifiable Theories

- 3.5.2 Rule 2: Build Theories That Are Internally Consistent

- 3.5.3 Rule 3: Select Dependent Variables Carefully

- 3.5.4 Rule 4: Maximize Concreteness

- 3.5.5 Rule 5: State Theories in as Encompassing Ways as Feasible

- 4 Determining What to Observe

- 4.1 Indeterminate Research Designs

- 4.1.1 More Inferences than Observations

- 4.1.2 Multicollinearity

- 4.2 The Limits of Random Selection

- 4.3 Selection Bias

- 4.3.1 Selection on the Dependent Variable

- 4.3.2 Selection on an Explanatory Variable

- 4.3.3 Other Types of Selection Bias

- 4.4 Intentional Selection of Observations

- 4.4.1 Selecting Observations on the Explanatory Variable

- 4.4.2 Selecting a Range of Values of the Dependent Variable

- 4.4.3 Selecting Observations on Both Explanatory and Dependent Variables

- 4.4.4 Selecting Observations So the Key Causal Variable Is Constant

- 4.4.5 Selecting Observations So the Dependent Variable Is Constant

- 4.5 Concluding Remarks

- 5 Understanding What to Avoid

- 5.1 Measurement Error

- 5.1.1 Systematic Measurement Error

- 5.1.2 Nonsystematic Measurement Error

- 5.2 Excluding Relevant Variables: Bias

- 5.2.1 Gauging the Bias from Omitted Variables

- 5.2.2 Examples of Omitted Variable Bias

- 5.3 Including Irrelevant Variables: Inefficiency

- 5.4 Endogeneity

- 5.4.1 Correcting Biased Inferences

- 5.4.2 Parsing the Dependent Variable

- 5.4.3 Transforming Endogeneity into an Omitted Variable Problem

- 5.4.4 Selecting Observations to Avoid Endogeneity

- 5.4.5 Parsing the Explanatory Variable

- 5.5 Assigning Values of the Explanatory Variable

- 5.6 Controlling the Research Situation

- 5.7 Concluding Remarks

- 6 Increasing the Number of Observations

- 6.1 Single-Observation Designs for Causal Inference

- 6.1.1 “Crucial” Case Studies

- 6.1.2 Reasoning by Analogy

- 6.2 How Many Observations Are Enough?

- 6.3 Making Many Observations from Few

- 6.3.1 Same Measures, New Units

- 6.3.2 Same Units, New Measures

- 6.3.3 New Measures, New Units

- 6.4 Concluding Remarks

- References

- Index