From social media posts and text messages to digital government documents and archives, researchers are bombarded with a deluge of text reflecting the social world. This textual data gives unprecedented insights into fundamental questions in the social sciences, humanities, and industry. Meanwhile new machine learning tools are rapidly transforming the way science and business are conducted. Text as Data shows how to combine new sources of data, machine learning tools, and social science research design to develop and evaluate new insights.

Text as Data is organized around the core tasks in research projects using text—representation, discovery, measurement, prediction, and causal inference. The authors offer a sequential, iterative, and inductive approach to research design. Each research task is presented complete with real-world applications, example methods, and a distinct style of task-focused research.

Bridging many divides—computer science and social science, the qualitative and the quantitative, and industry and academia—Text as Data is an ideal resource for anyone wanting to analyze large collections of text in an era when data is abundant and computation is cheap, but the enduring challenges of social science remain.

- Overview of how to use text as data

- Research design for a world of data deluge

- Examples from across the social sciences and industry

Justin Grimmer is professor of political science and a senior fellow at the Hoover Institution at Stanford University. Twitter @justingrimmer

Margaret E. Roberts is associate professor in political science and the Halıcıoğlu Data Science Institute at the University of California, San Diego. Twitter @mollyeroberts

Brandon M. Stewart is assistant professor of sociology and Arthur H. Scribner Bicentennial Preceptor at Princeton University. Twitter @b_m_stewart

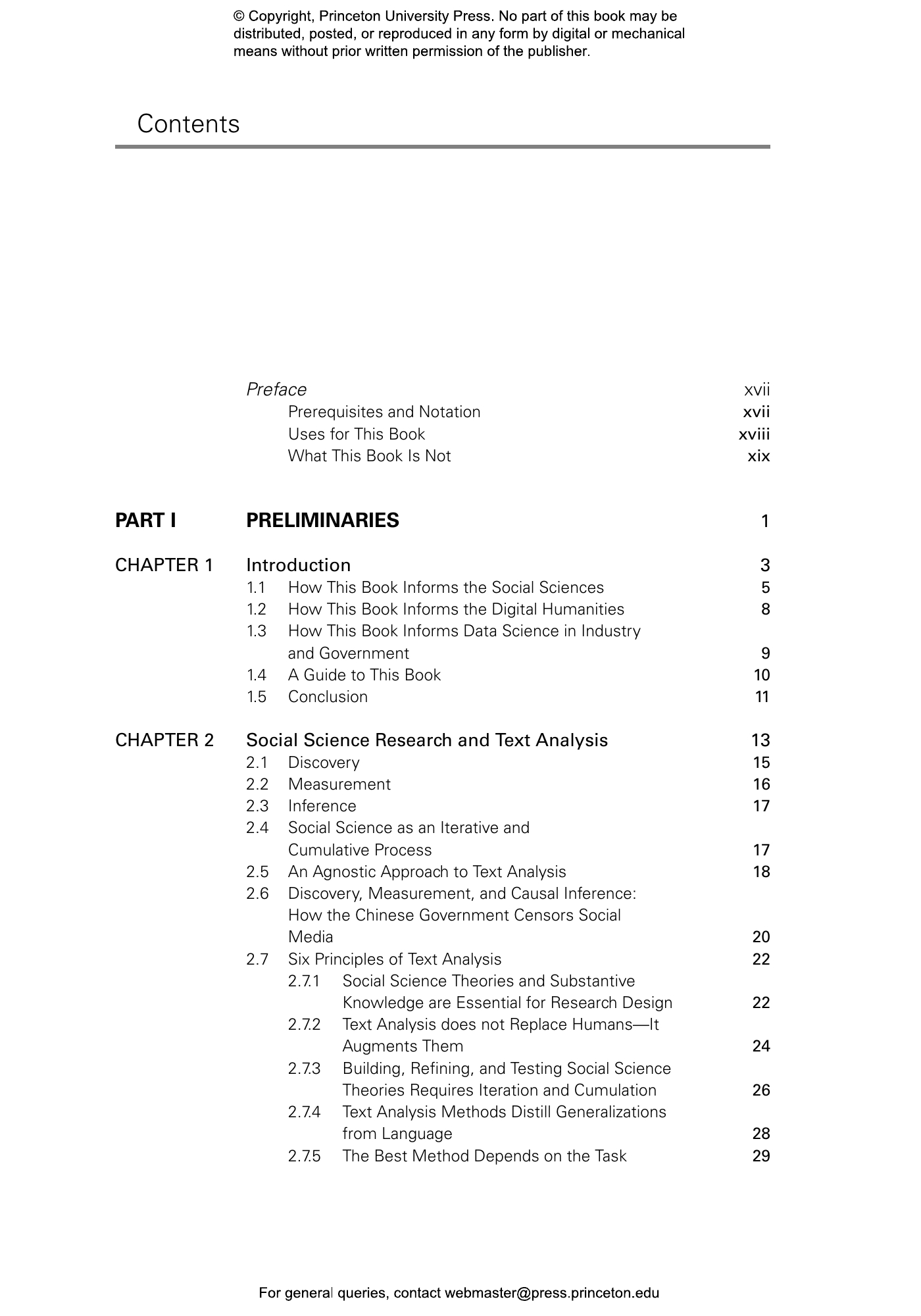

- Preface

- Prerequisites and Notation

- Uses for This Book

- What This Book Is Not

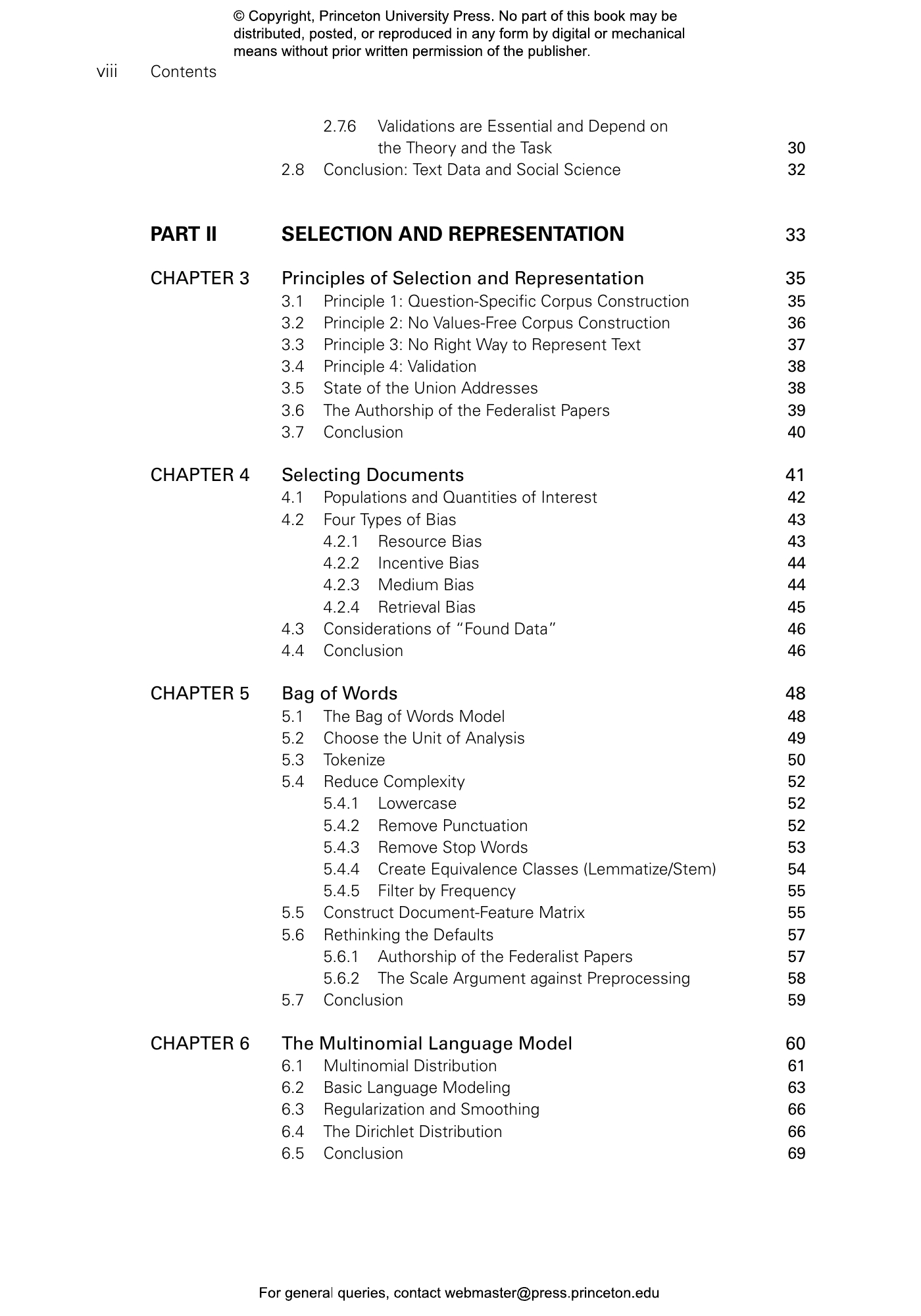

- PART I PRELIMINARIES

- CHAPTER 1 Introduction

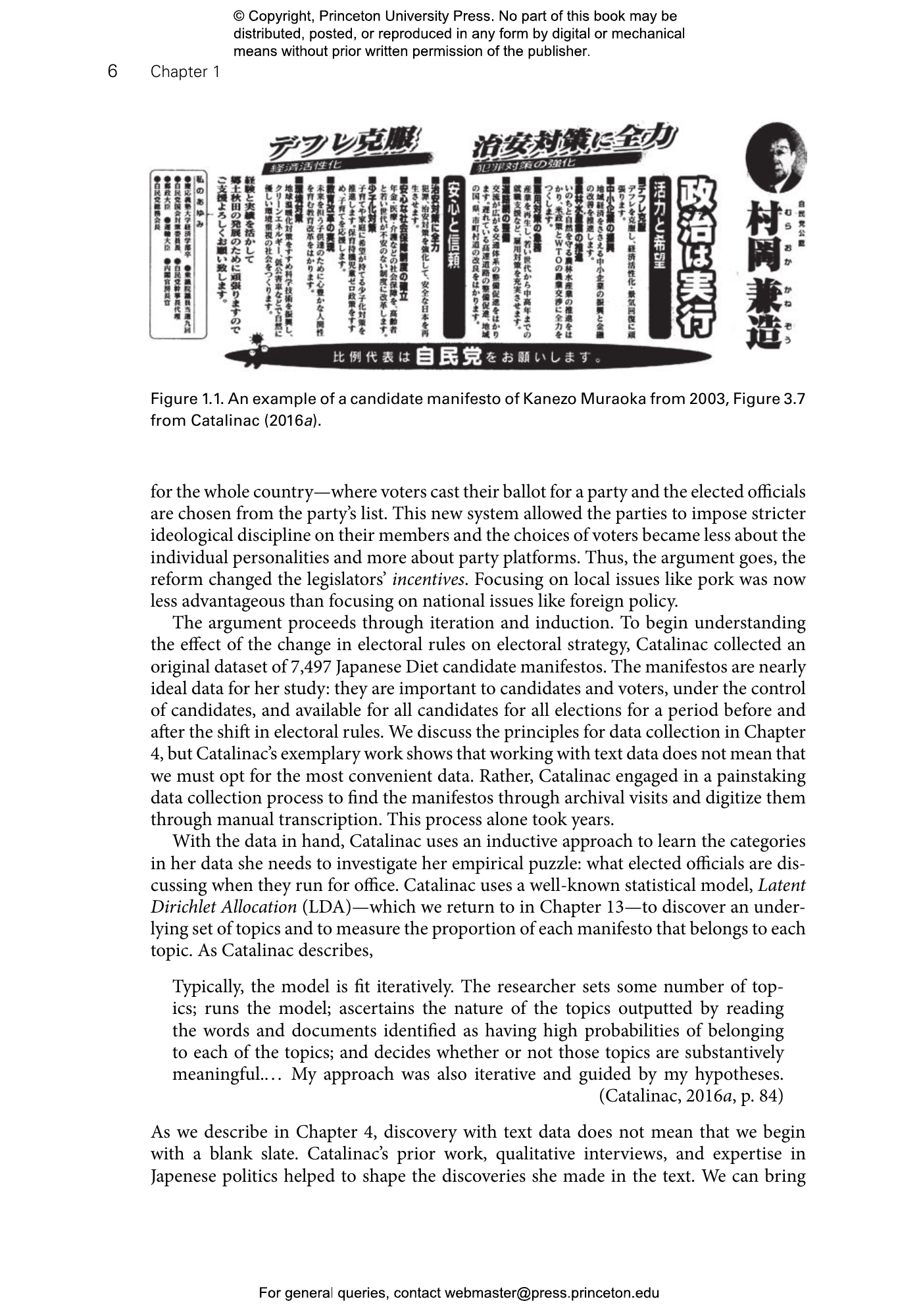

- 1.1 How This Book Informs the Social Sciences

- 1.2 How This Book Informs the Digital Humanities

- 1.3 How This Book Informs Data Science in Industry and Government

- 1.4 A Guide to This Book

- 1.5 Conclusion

- CHAPTER 2 Social Science Research and Text Analysis

- 2.1 Discovery

- 2.2 Measurement

- 2.3 Inference

- 2.4 Social Science as an Iterative and Cumulative Process

- 2.5 An Agnostic Approach to Text Analysis

- 2.6 Discovery, Measurement, and Causal Inference: How the Chinese Government Censors Social Media

- 2.7 Six Principles of Text Analysis

- 2.7.1 Social Science Theories and Substantive Knowledge are Essential for Research Design

- 2.7.2 Text Analysis does not Replace Humans—It Augments Them

- 2.7.3 Building, Refining, and Testing Social Science Theories Requires Iteration and Cumulation

- 2.7.4 Text Analysis Methods Distill Generalizations from Language

- 2.7.5 The Best Method Depends on the Task

- 2.7.6 Validations are Essential and Depend on the Theory and the Task

- 2.8 Conclusion: Text Data and Social Science

- PART II SELECTION AND REPRESENTATION

- CHAPTER 3 Principles of Selection and Representation

- 3.1 Principle 1: Question-Specific Corpus Construction

- 3.2 Principle 2: No Values-Free Corpus Construction

- 3.3 Principle 3: No Right Way to Represent Text

- 3.4 Principle 4: Validation

- 3.5 State of the Union Addresses

- 3.6 The Authorship of the Federalist Papers

- 3.7 Conclusion

- CHAPTER 4 Selecting Documents

- 4.1 Populations and Quantities of Interest

- 4.2 Four Types of Bias

- 4.2.1 Resource Bias

- 4.2.2 Incentive Bias

- 4.2.3 Medium Bias

- 4.2.4 Retrieval Bias

- 4.3 Considerations of “Found Data”

- 4.4 Conclusion

- CHAPTER 5 Bag of Words

- 5.1 The Bag of Words Model

- 5.2 Choose the Unit of Analysis

- 5.3 Tokenize

- 5.4 Reduce Complexity

- 5.4.1 Lowercase

- 5.4.2 Remove Punctuation

- 5.4.3 Remove Stop Words

- 5.4.4 Create Equivalence Classes (Lemmatize/Stem)

- 5.4.5 Filter by Frequency

- 5.5 Construct Document-Feature Matrix

- 5.6 Rethinking the Defaults

- 5.6.1 Authorship of the Federalist Papers

- 5.6.2 The Scale Argument against Preprocessing

- 5.7 Conclusion

- CHAPTER 6 The Multinomial Language Model

- 6.1 Multinomial Distribution

- 6.2 Basic Language Modeling

- 6.3 Regularization and Smoothing

- 6.4 The Dirichlet Distribution

- 6.5 Conclusion

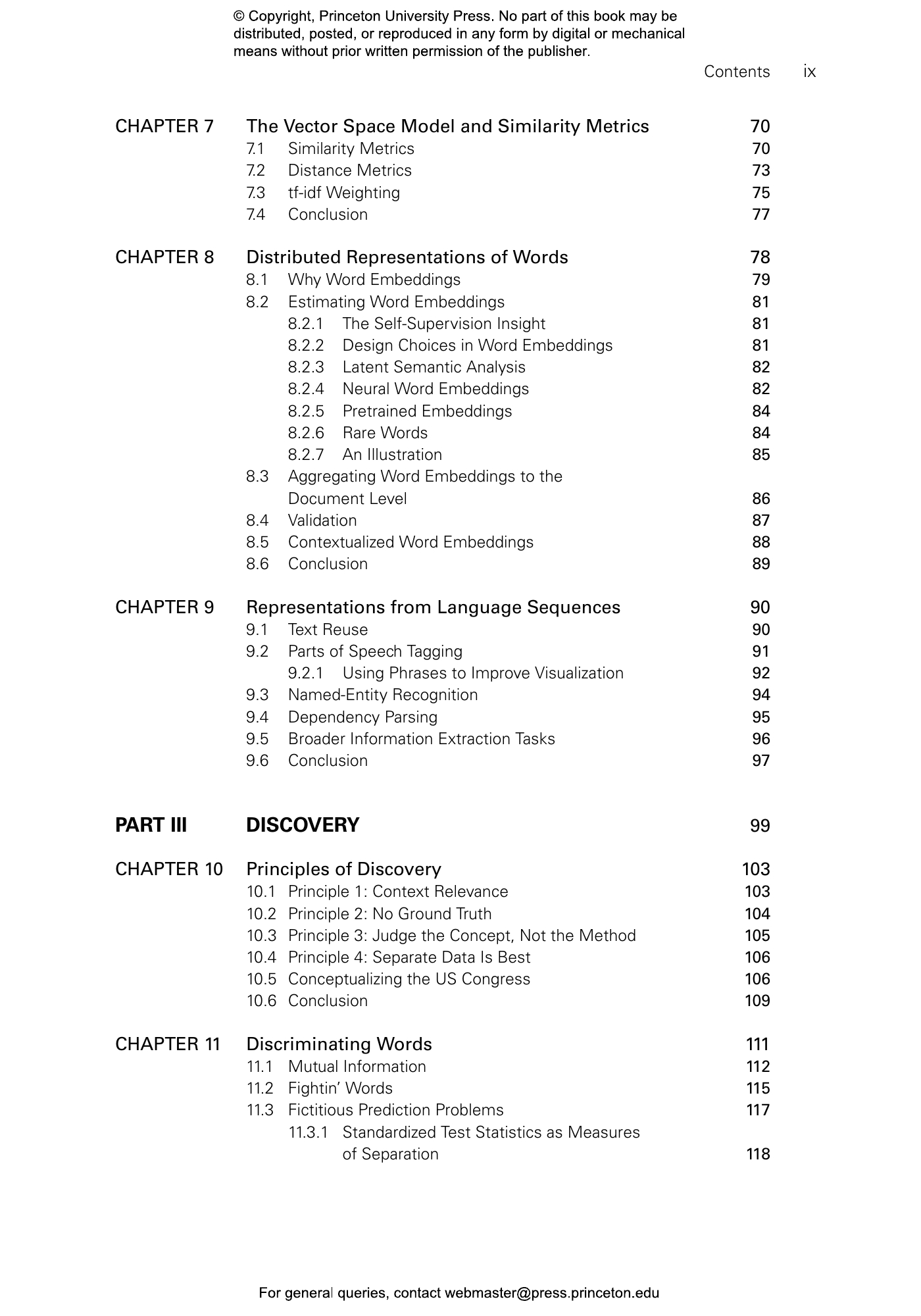

- CHAPTER 7 The Vector Space Model and Similarity Metrics

- 7.1 Similarity Metrics

- 7.2 Distance Metrics

- 7.3 tf-idf Weighting

- 7.4 Conclusion

- CHAPTER 8 Distributed Representations of Words

- 8.1 Why Word Embeddings

- 8.2 Estimating Word Embeddings

- 8.2.1 The Self-Supervision Insight

- 8.2.2 Design Choices in Word Embeddings

- 8.2.3 Latent Semantic Analysis

- 8.2.4 Neural Word Embeddings

- 8.2.5 Pretrained Embeddings

- 8.2.6 Rare Words

- 8.2.7 An Illustration

- 8.3 Aggregating Word Embeddings to the Document Level

- 8.4 Validation

- 8.5 Contextualized Word Embeddings

- 8.6 Conclusion

- CHAPTER 9 Representations from Language Sequences

- 9.1 Text Reuse

- 9.2 Parts of Speech Tagging

- 9.2.1 Using Phrases to Improve Visualization

- 9.3 Named-Entity Recognition

- 9.4 Dependency Parsing

- 9.5 Broader Information Extraction Tasks

- 9.6 Conclusion

- PART III DISCOVERY

- CHAPTER 10 Principles of Discovery

- 10.1 Principle 1: Context Relevance

- 10.2 Principle 2: No Ground Truth

- 10.3 Principle 3: Judge the Concept, Not the Method

- 10.4 Principle 4: Separate Data Is Best

- 10.5 Conceptualizing the US Congress

- 10.6 Conclusion

- CHAPTER 11 Discriminating Words

- 11.1 Mutual Information

- 11.2 Fightin’ Words

- 11.3 Fictitious Prediction Problems

- 11.3.1 Standardized Test Statistics as Measures of Separation

- 11.3.2 χ² Test Statistics

- 11.3.3 Multinomial Inverse Regression

- 11.4 Conclusion

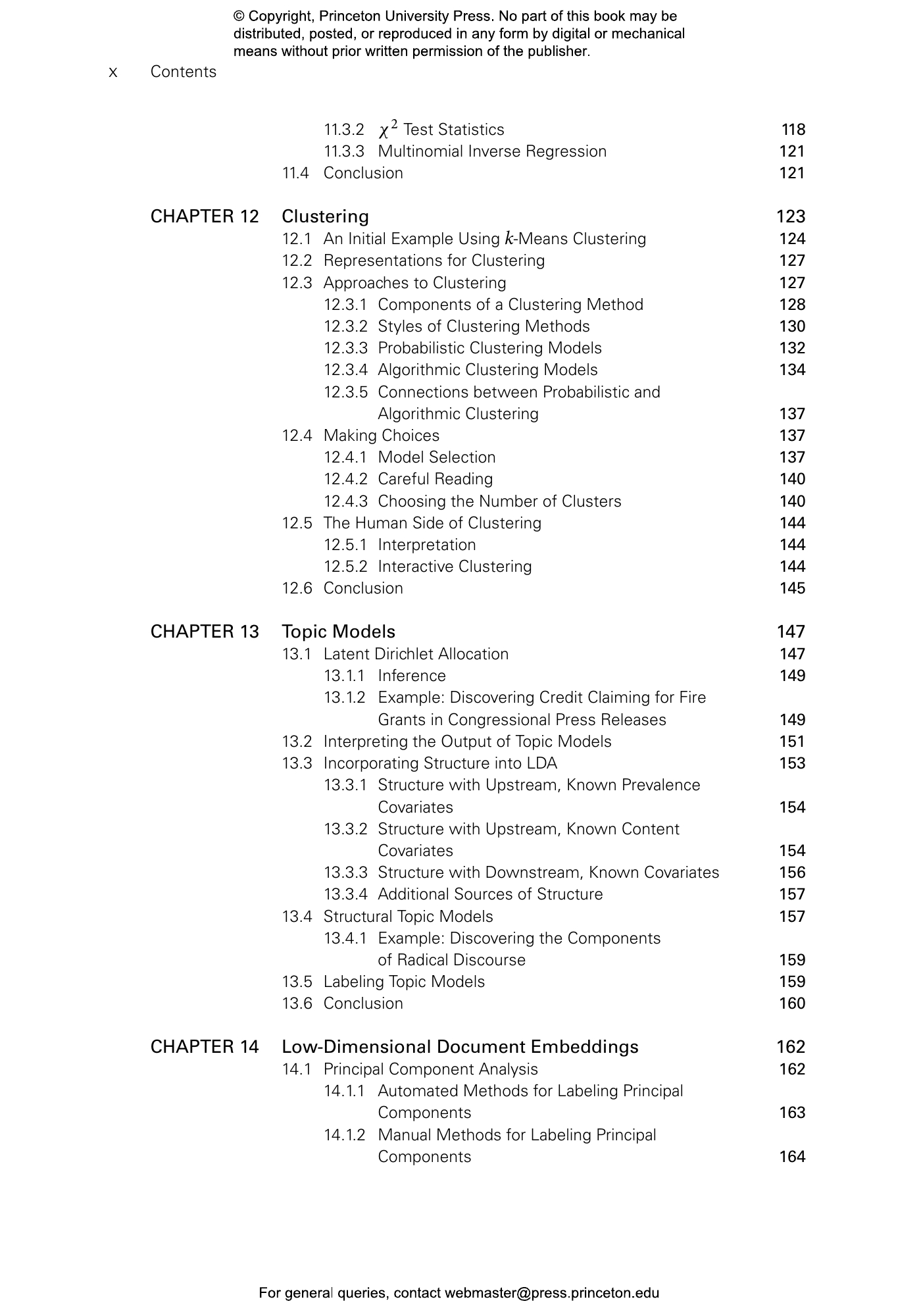

- CHAPTER 12 Clustering

- 12.1 An Initial Example Using k-Means Clustering

- 12.2 Representations for Clustering

- 12.3 Approaches to Clustering

- 12.3.1 Components of a Clustering Method

- 12.3.2 Styles of Clustering Methods

- 12.3.3 Probabilistic Clustering Models

- 12.3.4 Algorithmic Clustering Models

- 12.3.5 Connections between Probabilistic and Algorithmic Clustering

- 12.4 Making Choices

- 12.4.1 Model Selection

- 12.4.2 Careful Reading

- 12.4.3 Choosing the Number of Clusters

- 12.5 The Human Side of Clustering

- 12.5.1 Interpretation

- 12.5.2 Interactive Clustering

- 12.6 Conclusion

- CHAPTER 13 Topic Models

- 13.1 Latent Dirichlet Allocation

- 13.1.1 Inference

- 13.1.2 Example: Discovering Credit Claiming for Fire Grants in Congressional Press Releases

- 13.2 Interpreting the Output of Topic Models

- 13.3 Incorporating Structure into LDA

- 13.3.1 Structure with Upstream, Known Prevalence Covariates

- 13.3.2 Structure with Upstream, Known Content Covariates

- 13.3.3 Structure with Downstream, Known Covariates

- 13.3.4 Additional Sources of Structure

- 13.4 Structural Topic Models

- 13.4.1 Example: Discovering the Components of Radical Discourse

- 13.5 Labeling Topic Models

- 13.6 Conclusion

- CHAPTER 14 Low-Dimensional Document Embeddings

- 14.1 Principal Component Analysis

- 14.1.1 Automated Methods for Labeling Principal Components

- 14.1.2 Manual Methods for Labeling Principal Components

- 14.1.3 Principal Component Analysis of Senate Press Releases

- 14.1.4 Choosing the Number of Principal Components

- 14.2 Classical Multidimensional Scaling

- 14.2.1 Extensions of Classical MDS

- 14.2.2 Applying Classical MDS to Senate Press Releases

- 14.3 Conclusion

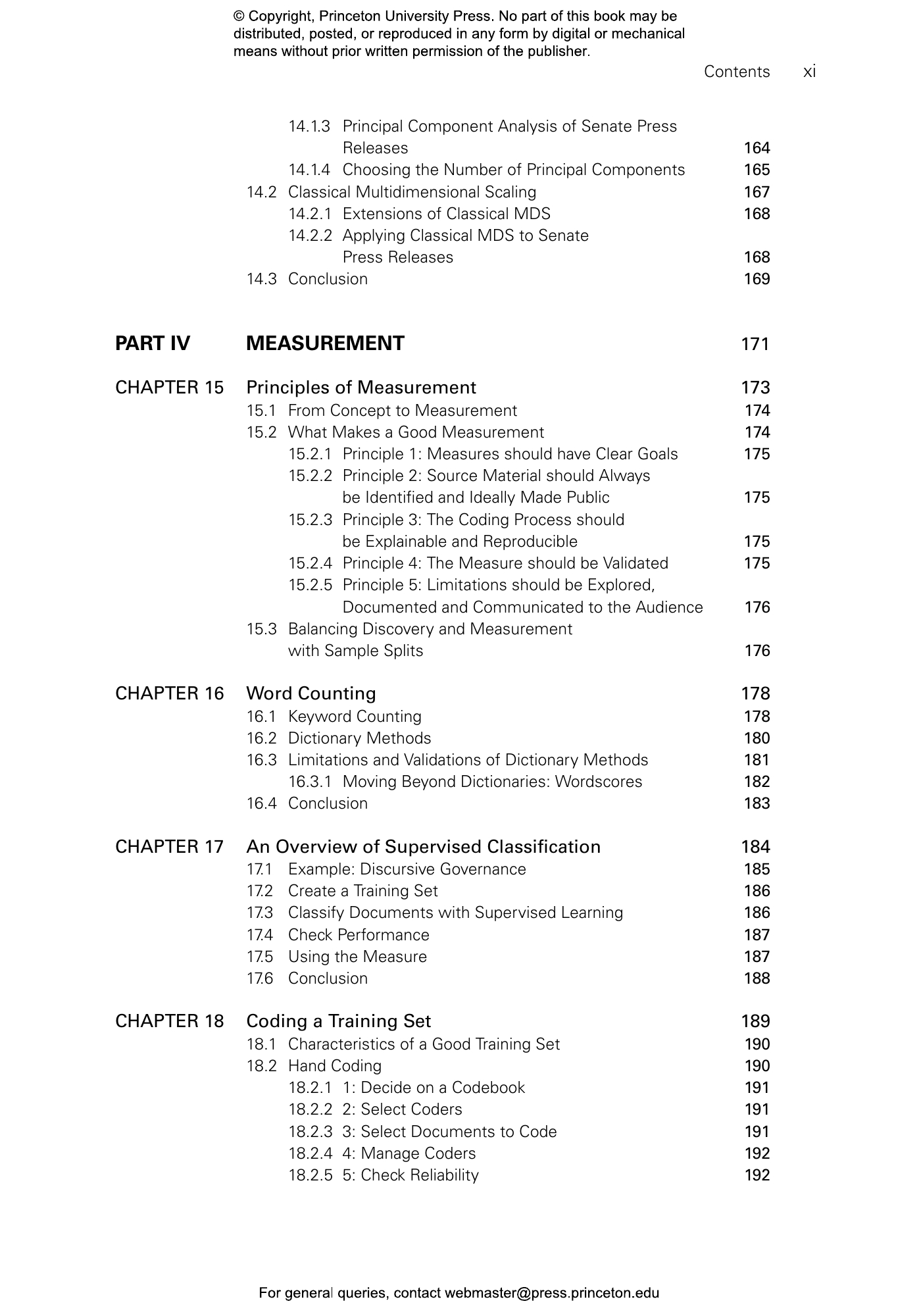

- PART IV MEASUREMENT

- CHAPTER 15 Principles of Measurement

- 15.1 From Concept to Measurement

- 15.2 What Makes a Good Measurement

- 15.2.1 Principle 1: Measures should have Clear Goals

- 15.2.2 Principle 2: Source Material should Always be Identified and Ideally Made Public

- 15.2.3 Principle 3: The Coding Process should be Explainable and Reproducible

- 15.2.4 Principle 4: The Measure should be Validated

- 15.2.5 Principle 5: Limitations should be Explored, Documented and Communicated to the Audience

- 15.3 Balancing Discovery and Measurement with Sample Splits

- CHAPTER 16 Word Counting

- 16.1 Keyword Counting

- 16.2 Dictionary Methods

- 16.3 Limitations and Validations of Dictionary Methods

- 16.3.1 Moving Beyond Dictionaries: Wordscores

- 16.4 Conclusion

- CHAPTER 17 An Overview of Supervised Classification

- 17.1 Example: Discursive Governance

- 17.2 Create a Training Set

- 17.3 Classify Documents with Supervised Learning

- 17.4 Check Performance

- 17.5 Using the Measure

- 17.6 Conclusion

- CHAPTER 18 Coding a Training Set

- 18.1 Characteristics of a Good Training Set

- 18.2 Hand Coding

- 18.2.1 1: Decide on a Codebook

- 18.2.2 2: Select Coders

- 18.2.3 3: Select Documents to Code

- 18.2.4 4: Manage Coders

- 18.2.5 5: Check Reliability

- 18.2.6 Managing Drift

- 18.2.7 Example: Making the News

- 18.3 Crowdsourcing

- 18.4 Supervision with Found Data

- 18.5 Conclusion

- CHAPTER 19 Classifying Documents with Supervised Learning

- 19.1 Naive Bayes

- 19.1.1 The Assumptions in Naive Bayes are Almost Certainly Wrong

- 19.1.2 Naive Bayes is a Generative Model

- 19.1.3 Naive Bayes is a Linear Classifier

- 19.2 Machine Learning

- 19.2.1 Fixed Basis Functions

- 19.2.2 Adaptive Basis Functions

- 19.2.3 Quantification

- 19.2.4 Concluding Thoughts on Supervised Learning with Random Samples

- 19.3 Example: Estimating Jihad Scores

- 19.4 Conclusion

- CHAPTER 20 Checking Performance

- 20.1 Validation with Gold-Standard Data

- 20.1.1 Validation Set

- 20.1.2 Cross-Validation

- 20.1.3 The Importance of Gold-Standard Data

- 20.1.4 Ongoing Evaluations

- 20.2 Validation without Gold-Standard Data

- 20.2.1 Surrogate Labels

- 20.2.2 Partial Category Replication

- 20.2.3 Nonexpert Human Evaluation

- 20.2.4 Correspondence to External Information

- 20.3 Example: Validating Jihad Scores

- 20.4 Conclusion

- CHAPTER 21 Repurposing Discovery Methods

- 21.1 Unsupervised Methods Tend to Measure Subject Better than Subtleties

- 21.2 Example: Scaling via Differential Word Rates

- 21.3 A Workflow for Repurposing Unsupervised Methods for Measurement

- 21.3.1 1: Split the Data

- 21.3.2 2: Fit the Model

- 21.3.3 3: Validate the Model

- 21.3.4 4: Fit to the Test Data and Revalidate

- 21.4 Concerns in Repurposing Unsupervised Methods for Measurement

- 21.4.1 Concern 1: The Method Always Returns a Result

- 21.4.2 Concern 2: Opaque Differences in Estimation Strategies

- 21.4.3 Concern 3: Sensitivity to Unintuitive Hyperparameters

- 21.4.4 Concern 4: Instability in results

- 21.4.5 Rethinking Stability

- 21.5 Conclusion

- PART V INFERENCE

- CHAPTER 22 Principles of Inference

- 22.1 Prediction

- 22.2 Causal Inference

- 22.2.1 Causal Inference Places Identification First

- 22.2.2 Prediction Is about Outcomes That Will Happen, Causal Inference is about Outcomes from Interventions

- 22.2.3 Prediction and Causal Inference Require Different Validations

- 22.2.4 Prediction and Causal Inference Use Features Differently

- 22.3 Comparing Prediction and Causal Inference

- 22.4 Partial and General Equilibrium in Prediction and Causal Inference

- 22.5 Conclusion

- CHAPTER 23 Prediction

- 23.1 The Basic Task of Prediction

- 23.2 Similarities and Differences between Prediction and Measurement

- 23.3 Five Principles of Prediction

- 23.3.1 Predictive Features do not have to Cause the Outcome

- 23.3.2 Cross-Validation is not Always a Good Measure of Predictive Power

- 23.3.3 It’s Not Always Better to be More Accurate on Average

- 23.3.4 There can be Practical Value in Interpreting Models for Prediction

- 23.3.5 It can be Difficult to Apply Prediction to Policymaking

- 23.4 Using Text as Data for Prediction: Examples

- 23.4.1 Source Prediction

- 23.4.2 Linguistic Prediction

- 23.4.3 Social Forecasting

- 23.4.4 Nowcasting

- 23.5 Conclusion

- CHAPTER 24 Causal Inference

- 24.1 Introduction to Causal Inference

- 24.2 Similarities and Differences between Prediction and Measurement, and Causal Inference

- 24.3 Key Principles of Causal Inference with Text

- 24.3.1 The Core Problems of Causal Inference Remain, even when Working with Text

- 24.3.2 Our Conceptualization of the Treatment and Outcome Remains a Critical Component of Causal Inference with Text

- 24.3.3 The Challenges of Making Causal Inferences with Text Underscore the Need for Sequential Science

- 24.4 The Mapping Function

- 24.4.1 Causal Inference with g

- 24.4.2 Identification and Overfitting

- 24.5 Workflows for Making Causal Inferences with Text

- 24.5.1 Define g before Looking at the Documents

- 24.5.2 Use a Train/Test Split

- 24.5.3 Run Sequential Experiments

- 24.6 Conclusion

- CHAPTER 25 Text as Outcome

- 25.1 An Experiment on Immigration

- 25.2 The Effect of Presidential Public Appeals

- 25.3 Conclusion

- CHAPTER 26 Text as Treatment

- 26.1 An Experiment Using Trump’s Tweets

- 26.2 A Candidate Biography Experiment

- 26.3 Conclusion

- CHAPTER 27 Text as Confounder

- 27.1 Regression Adjustments for Text Confounders

- 27.2 Matching Adjustments for Text

- 27.3 Conclusion

- PART VI CONCLUSION

- CHAPTER 28 Conclusion

- 28.1 How to Use Text as Data in the Social Sciences

- 28.1.1 The Focus on Social Science Tasks

- 28.1.2 Iterative and Sequential Nature of the Social Sciences

- 28.1.3 Model Skepticism and the Application of Machine Learning to the Social Sciences

- 28.2 Applying Our Principles beyond Text Data

- 28.3 Avoiding the Cycle of Creation and Destruction in Social Science Methodology

- Acknowledgments

- Bibliography

- Index

"Among the metaverse of possible books on Text as Data that could have been published . . . I was pleased that my universe produced this one. I will assign this book as a critical part of my own course on content analysis for years to come, and it has already altered and improved the coherence of my own vocabulary and articulation for several critical choices underlying the process of turning text into data. . . . Highly recommend."—James Evans, Sociological Methods & Research

“This is the definitive guide for social scientists wishing to work with text-based data. Written by pioneers in the field, Text as Data provides a comprehensive overview of the state of the art. But the authors don’t stop there: they offer a fresh agenda for doing social science, showing how algorithms can augment our ability to develop theories of human behavior, rather than poorly attempting to replace us.”—Chris Bail, author of Breaking the Social Media Prism

“Text as Data is a long-awaited book by an all-star team of methodologists. The explosion of textual data provides unprecedented opportunities to learn about human behavior and society at a massive scale. Through this authoritative book, Grimmer, Roberts, and Stewart lay the foundation of text analysis for students and researchers.”—Kosuke Imai, author of Quantitative Social Science

“This book provides a clear and comprehensive introduction to the key computational techniques for analyzing text data. The technical material is contextualized within a broader research philosophy that will drive exciting new applications in computational social science, the digital humanities, and commercial data science. I highly recommend it.”—Jacob Eisenstein, author of Introduction to Natural Language Processing

“Beyond offering an engaging survey of text analysis methods, this book is a vital guide to social science research design. Diverse applications from detecting Chinese censorship to classifying jihadist texts bring text analysis to life for readers of all methodological backgrounds. My students praised Text as Data as one of the best textbooks they have encountered.”—Alexandra Siegel, University of Colorado, Boulder

"This book fills acute gaps in the theory components of text as data. Accessible to advanced undergraduates and graduate students with some background in social science terminology and methodology, this volume draws together aspects of text-as-data approaches that are often discussed and applied separately, and brings them into a coherent framework."—Sarah Bouchat, Northwestern University

"Written by leaders in the discipline, Text as Data is an excellent book. Comprehensive in its scope, this work is a perfect introduction for social science graduate students and faculty getting into the field."—Arthur Spirling, New York University

"There is a clear lack of relevant textbooks in the social science text-as-data area. Thorough and manageable, Text as Data presents a good conceptual overview and frames issues at the right level."—David Mimno, Cornell University