Confused about AI and worried about what it means for your future and the future of the world? You’re not alone. AI is everywhere—and few things are surrounded by so much hype, misinformation, and misunderstanding. In AI Snake Oil, computer scientists Arvind Narayanan and Sayash Kapoor cut through the confusion to give you an essential understanding of how AI works and why it often doesn’t, where it might be useful or harmful, and when you should suspect that companies are using AI hype to sell AI snake oil—products that don’t work, and probably never will.

While acknowledging the potential of some AI, such as ChatGPT, AI Snake Oil uncovers rampant misleading claims about the capabilities of AI and describes the serious harms AI is already causing in how it’s being built, marketed, and used in areas such as education, medicine, hiring, banking, insurance, and criminal justice. The book explains the crucial differences between types of AI, why organizations are falling for AI snake oil, why AI can’t fix social media, why AI isn’t an existential risk, and why we should be far more worried about what people will do with AI than about anything AI will do on its own. The book also warns of the dangers of a world where AI continues to be controlled by largely unaccountable big tech companies.

By revealing AI’s limits and real risks, AI Snake Oil will help you make better decisions about whether and how to use AI at work and home.

Awards and Recognition

- A Publishers Weekly Fall Science Preview Top 10

"As artificial intelligence proliferates, more and more hinges on our ability to articulate our own value. We seem to be on the cusp of a world in which workers of all kinds—teachers, doctors, writers, photographers, lawyers, coders, clerks, and more—will be replaced with, or to some degree sidelined by, their A.I. equivalents. What will get left out when A.I. steps in? . . . [Narayanan and Kapoor] approach the question on a practical level. They urge skepticism, and argue that the blanket term 'A.I.' can serve as a kind of smoke screen for underperforming technologies. . . . [AI Snake Oil isn’t] just describing A.I., which continues to evolve, but characterizing the human condition."—Joshua Rothman, The New Yorker

"The experienced authors bring a wealth of knowledge to their subject. . . . Written in language that even nontechnical readers can understand, the text provides plenty of practical suggestions that can benefit creators and users alike. It’s also worth noting that Narayanan and Kapoor write a regular newsletter to update their points. Highly useful advice for those who work with or are affected by AI—i.e., nearly everyone."—Kirkus, starred review

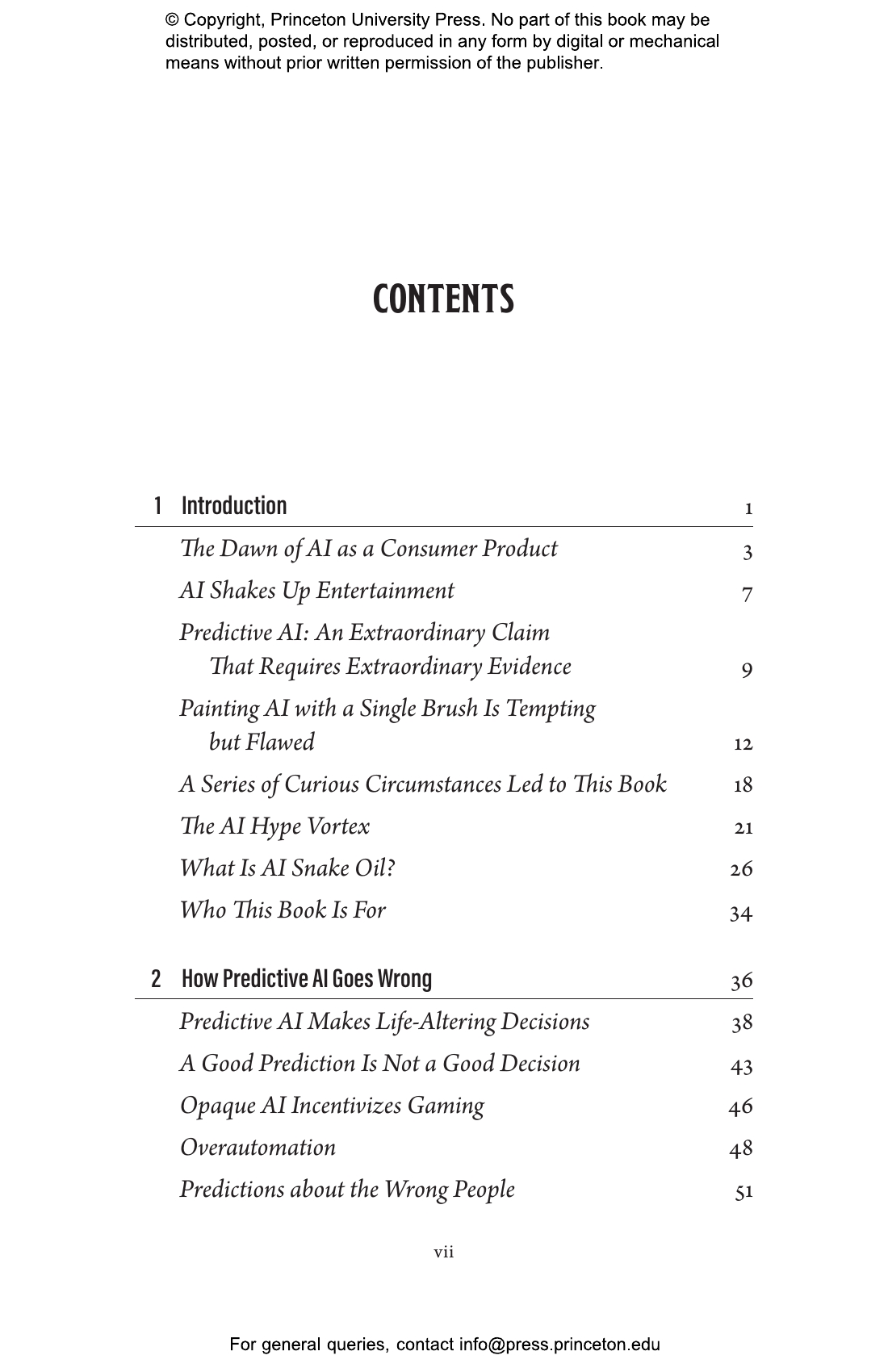

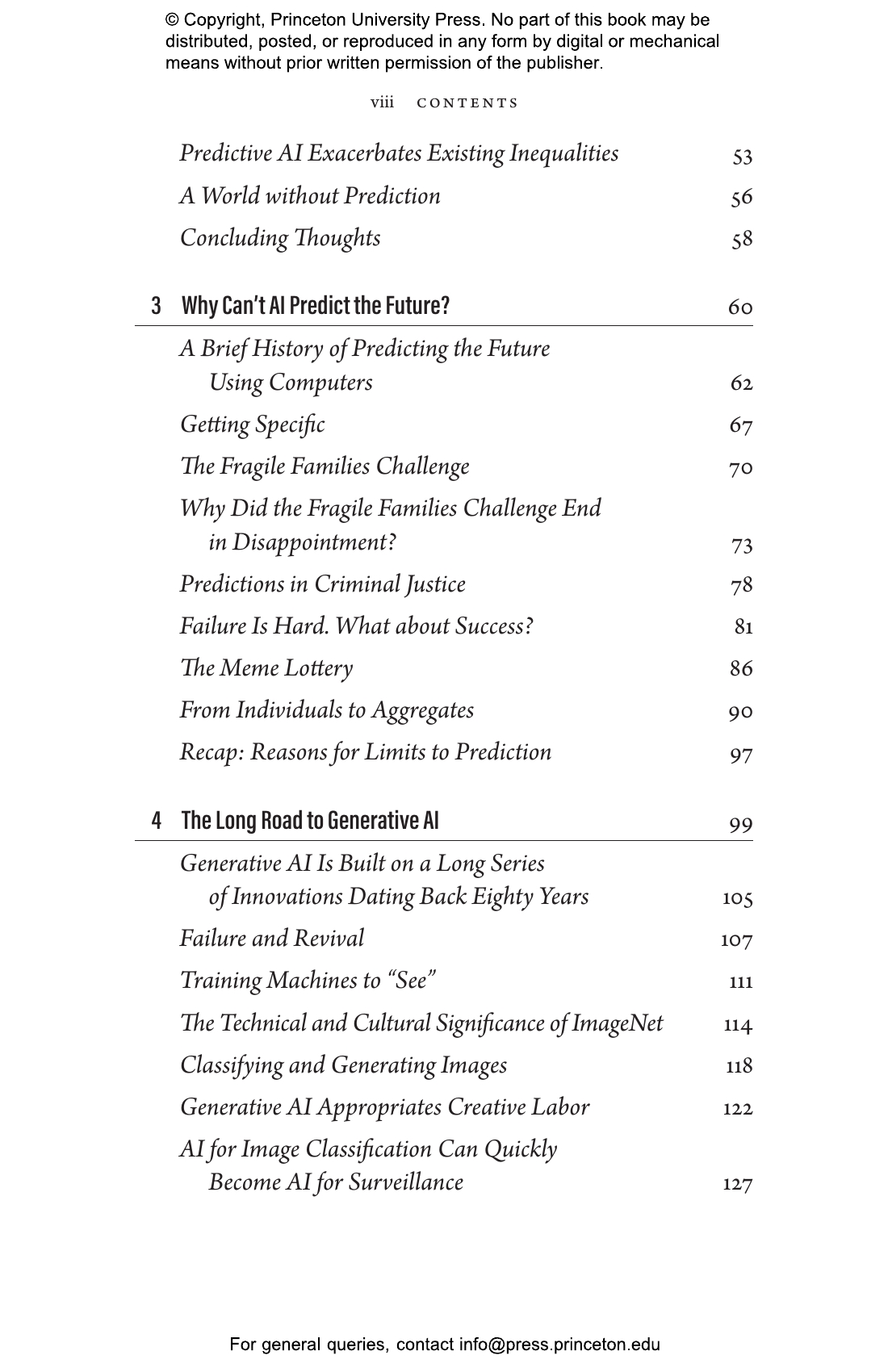

"The first step to understanding AI better is coming to terms with the vagueness of the term. . . . AI Snake Oil divides artificial intelligence into two subcategories: predictive AI, which uses data to assess future outcomes; and generative AI, which crafts probable answers to prompts based on past data. It’s worth it for anyone who encounters AI tools, willingly or not, to spend at least a little time trying to better grasp key concepts."—Reece Rogers, WIRED

"A worthwhile read whether you make policy decisions, use AI in the workplace or just spend time searching online. It’s a powerful reminder of how AI has already infiltrated our lives—and a convincing plea to take care in how we interact with it."—Elizabeth Quill, Science News

"Effectively translates the technical to the practical."—John Warner, Inside Higher Ed

"[A] solid overview of AI’s defects."—Publishers Weekly

"Arvind Narayanan and Sayash Kapoor write about AI capabilities in a wide range of settings, from criminal sentencing to moderating social media. My favorite part of the book is Chapter 5, which takes the arguments of AI doomers head-on."—Timothy B. Lee, Understanding AI

"The authors admirably differentiate fact from opinion, draw from personal experience, give sensible reasons for their views (including copious references), and don’t hesitate to call for action. . . . If you’re curious about AI or deciding how to implement it, AI Snake Oil offers clear writing and level-headed thinking."—Jean Gazis, PracticalEcommerce

"Well-informed, clear and persuasive—the cool breeze of knowledge and good sense are a good antidote to anybody inclined to believe the hyped claims and fears."—Diane Coyle, Enlightened Economist

"This book is an easy read for anyone interested in artificial intelligence (AI). It is a wide subject, with categories of AI that do different things, and the authors give many good examples to educate and dispel AI myths."—Geraldine McBride, Financial World

"Narayanan and Kapoor’s efforts are clarifying. . . . They demystify the technical details behind what we call AI with ease, cutting against the deluge of corporate marketing from this sector."—Edward Ongweso Jr., The New Republic

“With a much-needed dose of skepticism, this clear and practical guide will help you understand some of the worst dangers of the current AI hype cycle.”—Kate Crawford, author of Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence

“This revelatory book exposes the ‘AI hype vortex,’ a black hole of misinformation and mythmaking propelled by desires for quick fixes and a fast buck. Narayanan and Kapoor cut through the hype with crisp and accessible writing that bravely calls out how AI fails us daily, with devastating consequences, and points to how it might one day benefit us—but only if governed in the public interest.”—Alondra Nelson, Science, Technology, and Social Values Lab, Institute for Advanced Study

“Amidst the constant swirl of hype and fear around artificial intelligence, AI Snake Oil is a breath of fresh air. This expert and entertaining guide is a must-read for anyone who wonders about AI’s potential promise and its perils.”—Melanie Mitchell, author of Artificial Intelligence: A Guide for Thinking Humans

“If you are only going to read one book on AI, make it this one. Narayanan and Kapoor make simple what everyone else is trying to make complex. Reading it makes you realize you are not crazy, the AI discourse is crazy, and they have given you a roadmap to clarity.”—Julia Angwin, author of Dragnet Nation: A Quest for Privacy, Security, and Freedom in a World of Relentless Surveillance