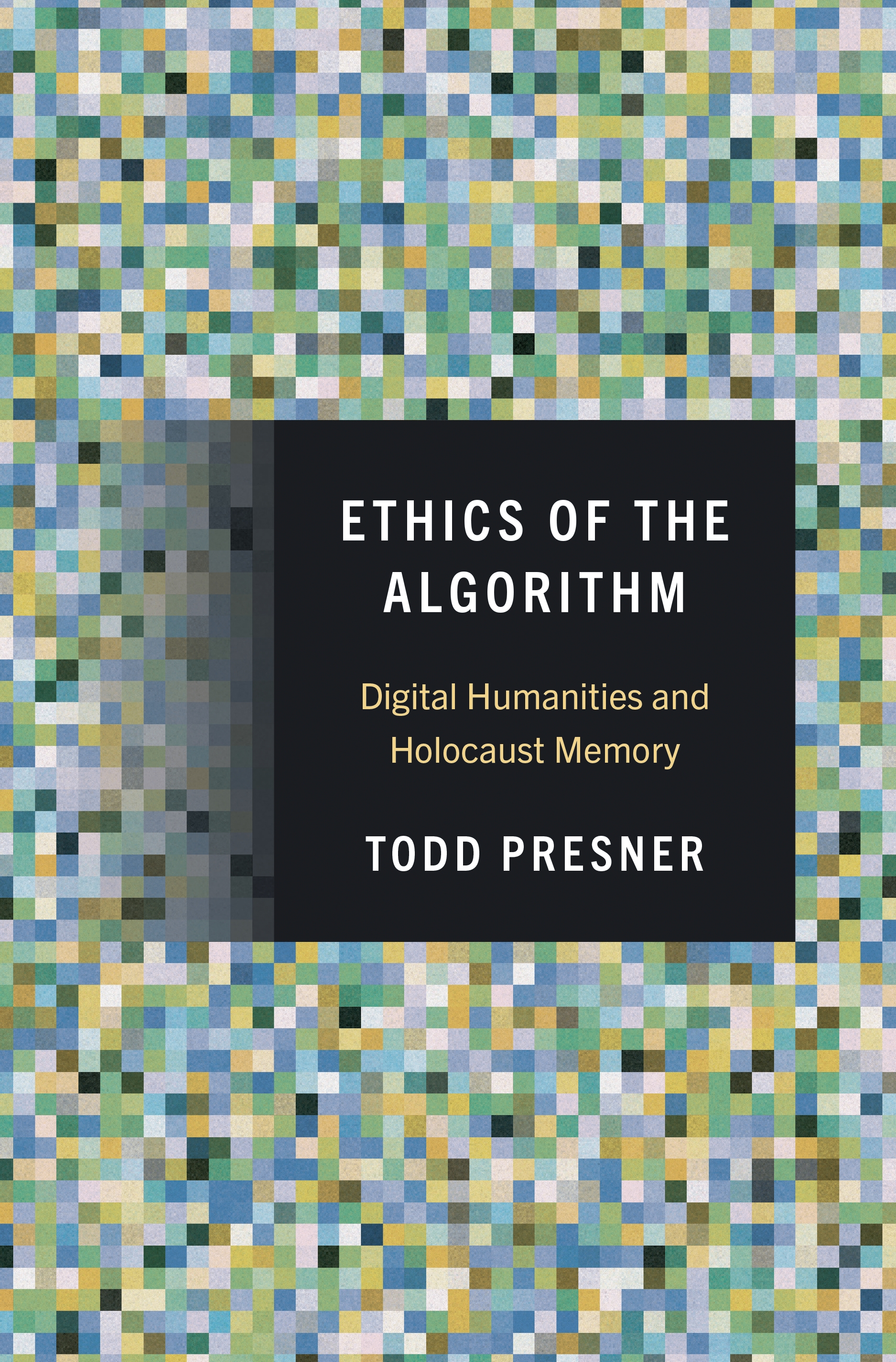

Ethics of the Algorithm: Digital Humanities and Holocaust Memory

Hardcover

- Price: $39.95/£35.00
- ISBN:
- Published (US): Sep 24, 2024
- Published (UK): Nov 19, 2024
- Pages: 456
- Size: 6.13 x 9.25 in.
- Illustrations: 108 color illus.
- Main subject: History


The Holocaust is one of the most documented—and now digitized—events in human history. Institutions and archives hold hundreds of thousands of hours of audio and video testimony, composed of more than a billion words in dozens of languages, with millions of pieces of descriptive metadata. It would take several lifetimes to engage with these testimonies one at a time. Computational methods could be used to analyze an entire archive—but what are the ethical implications of “listening” to Holocaust testimonies by means of an algorithm? In this book, Todd Presner explores how the digital humanities can provide both new insights and humanizing perspectives for Holocaust memory and history.

Presner suggests that it is possible to develop an “ethics of the algorithm” that mediates between the ethical demands of listening to individual testimonies and the interpretative possibilities of computational methods. He delves into thousands of testimonies and witness accounts, focusing on the analysis of trauma, language, voice, genre, and the archive itself. Tracing the affordances of digital tools that range from early, proto-computational approaches to more recent uses of automatic speech recognition and natural language processing, Presner introduces readers to what may be the ultimate expression of these methods: AI-driven testimonies that use machine learning to process responses to questions, offering a user experience that seems to replicate an actual conversation with a Holocaust survivor.

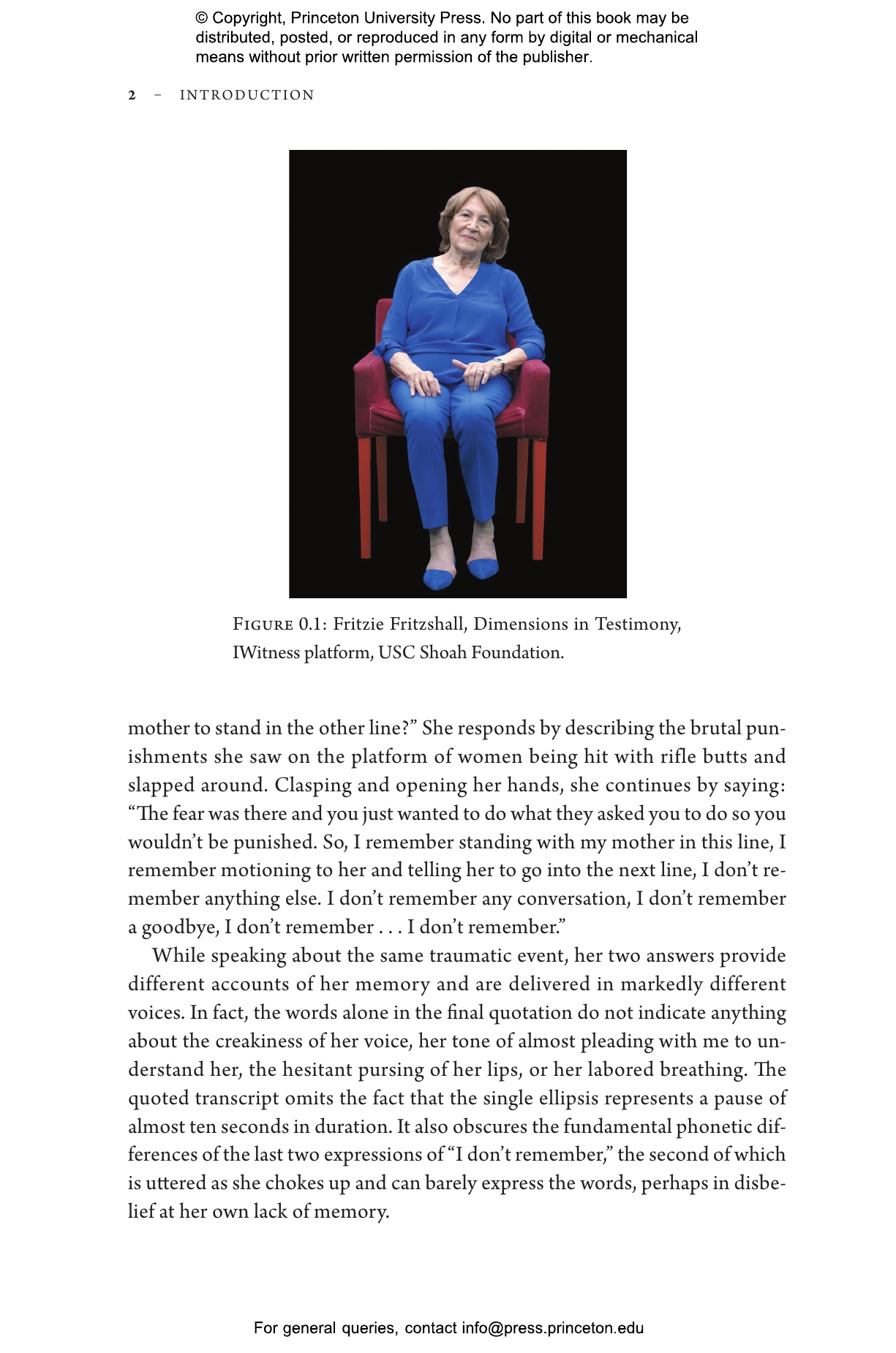

With Ethics of the Algorithm, Presner presents a digital humanities argument for how big data models and computational methods can be used to preserve and perpetuate cultural memory.